Today

- Inference on the coefficients

- F-tests

Inference on the coefficients

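With the addition of the Normality assumption, $\epsilon_i \overset{iid}{\sim} N(0, \sigma^2)$,

$$\frac{\hat{\beta}_j - \beta_j}{\widehat{\text{SE}}(\hat{\beta}_j)} \sim t_{n-2}, \qquad j = 0, 1,$$

where $\widehat{\text{SE}}(\hat{\beta}_j)$ is the standard error of the estimate: the square root of its variance, with $\hat{\sigma}^2$ plugged in for $\sigma^2$.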

Leads to confidence intervals and hypothesis tests of the individual coefficients.
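To see the $t_{n-2}$ result at work, here is a small simulation sketch. It uses hypothetical true values ($\beta_0 = 4$, $\beta_1 = 10$, $\sigma = 1.5$, $n = 21$), not the bird data. Each replicate forms the interval $\hat{\beta}_1 \pm t_{n-2}(0.975)\,\widehat{\text{SE}}(\hat{\beta}_1)$, with $\widehat{\text{SE}}(\hat{\beta}_1) = \hat{\sigma} / \sqrt{\sum_{i=1}^n (X_i - \bar{X})^2}$, and records whether it covers the true slope; the coverage should come out close to 95%.

```r
# Simulation sketch: coverage of the t-based 95% interval for the slope.
# True parameter values, n, and the X values are hypothetical choices.
set.seed(1)
n <- 21
beta0 <- 4
beta1 <- 10
sigma <- 1.5
x <- runif(n, 0, 0.6)                 # fixed design, reused in every replicate
sxx <- sum((x - mean(x))^2)
t_crit <- qt(0.975, df = n - 2)

covered <- replicate(2000, {
  y <- beta0 + beta1 * x + rnorm(n, sd = sigma)      # Normal errors
  b1 <- sum((x - mean(x)) * (y - mean(y))) / sxx     # least squares slope
  b0 <- mean(y) - b1 * mean(x)                       # least squares intercept
  sigma_hat <- sqrt(sum((y - b0 - b1 * x)^2) / (n - 2))
  se_b1 <- sigma_hat / sqrt(sxx)                     # SE with sigma-hat plugged in
  abs(b1 - beta1) <= t_crit * se_b1                  # TRUE if interval covers beta1
})

coverage <- mean(covered)
coverage
```

Swapping `qt(0.975, n - 2)` for the Normal quantile `qnorm(0.975)` would give slightly lower coverage at this small $n$, which is why the $t$ distribution is used.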

Also under Normality the least squares estimates of slope and intercept are the maximum likelihood estimates.
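A quick numerical check of the maximum likelihood claim, assuming simulated data (hypothetical parameter values): maximize the Normal log-likelihood with `optim()` and compare the result to `lm()`. Parameterizing $\sigma$ on the log scale is just a convenience to keep it positive during optimization.

```r
# Sketch: Normal maximum likelihood vs least squares on simulated data.
set.seed(2)
n <- 21
x <- runif(n, 0, 0.6)
y <- 4 + 10 * x + rnorm(n, sd = 1.5)

# Negative log-likelihood in (beta0, beta1, log sigma)
neg_loglik <- function(par) {
  mu <- par[1] + par[2] * x
  -sum(dnorm(y, mean = mu, sd = exp(par[3]), log = TRUE))
}

mle <- optim(c(0, 0, 0), neg_loglik, method = "BFGS",
             control = list(reltol = 1e-12, maxit = 1000))
ls_fit <- lm(y ~ x)

rbind(mle = mle$par[1:2], least_squares = coef(ls_fit))

# The MLE of sigma^2 is RSS / n, not the unbiased RSS / (n - 2)
c(mle = exp(mle$par[3])^2, rss_over_n = sum(resid(ls_fit)^2) / n)
```

The slope and intercept agree, but the MLE of $\sigma^2$ divides by $n$, so it differs from the $\hat{\sigma}^2$ used in the $t$ intervals.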

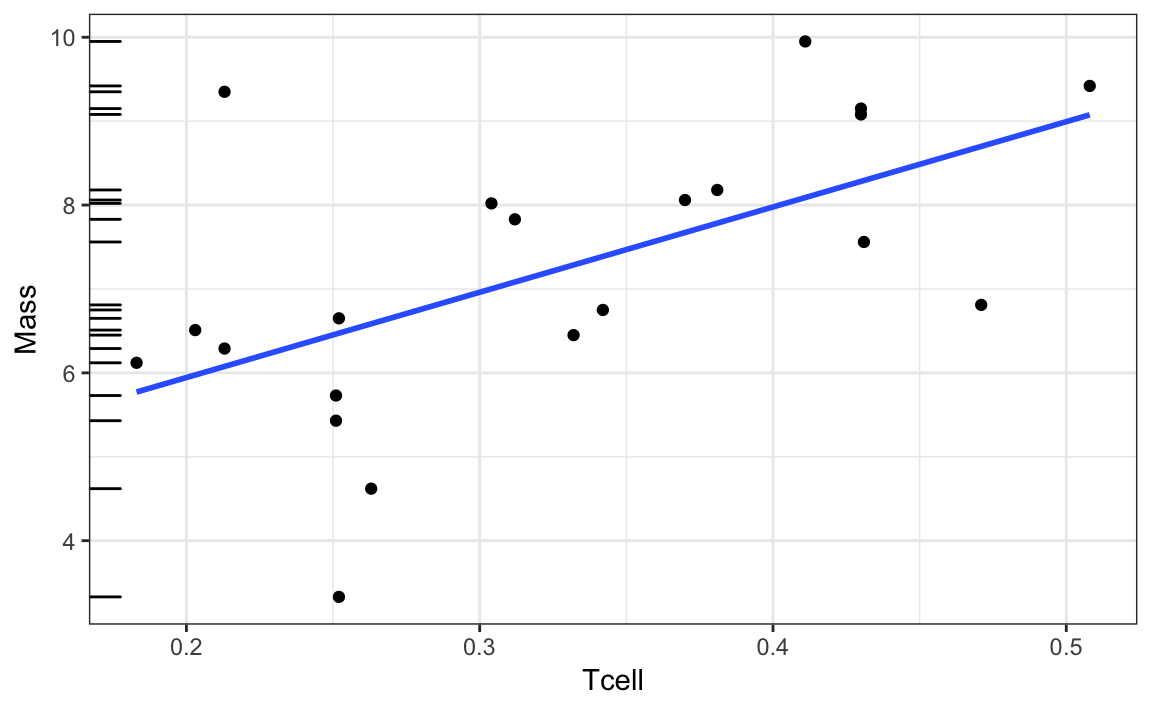

Weightlifting birds

Recall the model:

$$\text{Mass}_i = \beta_0 + \beta_1 \text{Tcell}_i + \epsilon_i$$

$\hat{\beta}_1 = 10.165$

What’s the t-statistic for testing the null hypothesis ?
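A $t$-statistic is (estimate $-$ hypothesized value) / standard error. The sketch below plugs in the Tcell row of the `summary(slr)` output; `null_value = 0` is the test R reports by default, and the helper `t_test()` is a hypothetical name for illustration.

```r
# t-statistic and two-sided p-value from printed regression output.
# Estimate, SE, and residual df are copied from the Tcell row of summary(slr).
estimate <- 10.165
std_error <- 3.296
df_resid <- 19

t_test <- function(null_value) {
  t_stat <- (estimate - null_value) / std_error
  p_value <- 2 * pt(-abs(t_stat), df = df_resid)   # two-sided p-value
  c(t = t_stat, p = p_value)
}

t_test(0)   # agrees with t value 3.084 and Pr(>|t|) 0.00611 up to rounding
```

For any other hypothesized slope, pass that value as `null_value`; only the numerator changes.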

```r
library(Sleuth3)  # assumes ex0727 comes from the Sleuth3 package
slr <- lm(Mass ~ Tcell, data = ex0727)
summary(slr)
#> 
#> Call:
#> lm(formula = Mass ~ Tcell, data = ex0727)
#> 
#> Residuals:
#>     Min      1Q  Median      3Q     Max
#> -3.1429 -0.7327  0.3448  0.7472  3.2736
#> 
#> Coefficients:
#>             Estimate Std. Error t value Pr(>|t|)
#> (Intercept)    3.911      1.112   3.517  0.00230 **
#> Tcell         10.165      3.296   3.084  0.00611 **
#> ---
#> Signif. codes:  0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1
#> 
#> Residual standard error: 1.426 on 19 degrees of freedom
#> Multiple R-squared:  0.3336,  Adjusted R-squared:  0.2986
#> F-statistic: 9.513 on 1 and 19 DF,  p-value: 0.006105
```

Prediction

Consider a new observation with explanatory value $X_0$. The true response is $Y_0 = \beta_0 + \beta_1 X_0 + \epsilon_0$, with expected value $E(Y_0) = \beta_0 + \beta_1 X_0$.

There are two things we might be interested in:

- estimating the mean response at this value, $E(Y_0)$

- predicting the response at this value, $Y_0$

In both cases, the point prediction is $\hat{Y}_0 = \hat{\beta}_0 + \hat{\beta}_1 X_0$.
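As a worked instance, the point prediction just plugs $X_0$ into the fitted line. This sketch uses the rounded estimates from the weightlifting-birds output; `x0 = 0.3` is a hypothetical Tcell value chosen only for illustration.

```r
# Point prediction from the printed estimates (rounded to 3 decimals).
b0 <- 3.911    # intercept estimate from summary(slr)
b1 <- 10.165   # Tcell slope estimate from summary(slr)
x0 <- 0.3      # hypothetical new Tcell value
y0_hat <- b0 + b1 * x0
y0_hat         # 6.9605
```

With the fitted object itself, `predict(slr, newdata = data.frame(Tcell = x0))` should give the same value up to the rounding of the printed coefficients.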

Confidence interval on the mean response

When estimating the mean response, uncertainty only comes from the uncertainty in our estimates of the slope and intercept.

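Leads to confidence intervals of the form

$$\hat{Y}_0 \pm t_{n-2}(1 - \alpha/2)\, \hat{\sigma} \sqrt{\frac{1}{n} + \frac{(X_0 - \bar{X})^2}{\sum_{i=1}^n (X_i - \bar{X})^2}}$$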

“With 95% confidence, we estimate the mean response is between …”
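A sketch of the confidence interval for the mean response computed straight from the formula and checked against `predict()` with `interval = "confidence"`. The data are simulated and `x0 = 0.3` is hypothetical.

```r
# 95% CI for the mean response at x0: formula vs predict().
set.seed(3)
n <- 21
x <- runif(n, 0, 0.6)
y <- 4 + 10 * x + rnorm(n, sd = 1.5)
fit <- lm(y ~ x)

x0 <- 0.3
b <- coef(fit)
y0_hat <- b[[1]] + b[[2]] * x0
sigma_hat <- summary(fit)$sigma                  # sqrt(RSS / (n - 2))
se_mean <- sigma_hat * sqrt(1 / n + (x0 - mean(x))^2 / sum((x - mean(x))^2))
t_crit <- qt(0.975, df = n - 2)

ci_manual <- c(fit = y0_hat,
               lwr = y0_hat - t_crit * se_mean,
               upr = y0_hat + t_crit * se_mean)
ci_predict <- predict(fit, newdata = data.frame(x = x0), interval = "confidence")

ci_manual
ci_predict
```

The $(X_0 - \bar{X})^2$ term makes the interval narrowest at $X_0 = \bar{X}$ and wider as $X_0$ moves away from the center of the data.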

Prediction interval for a new response

When predicting a new response, uncertainty also comes from the variation about the mean.

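Leads to prediction intervals of the form

$$\hat{Y}_0 \pm t_{n-2}(1 - \alpha/2)\, \hat{\sigma} \sqrt{1 + \frac{1}{n} + \frac{(X_0 - \bar{X})^2}{\sum_{i=1}^n (X_i - \bar{X})^2}}$$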

“A 95% prediction interval for the response is …”

(I don’t like the wording “With 95% probability, …” because it isn’t quite correct: part of our uncertainty is still uncertainty in the estimation of the parameters, not just uncertainty from the random error.)
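The prediction interval differs from the confidence interval only by the extra $1$ under the square root, which accounts for the new observation's own error $\epsilon_0$. A sketch on simulated data (hypothetical values, `x0 = 0.3`) compares the formula with `predict()` using `interval = "prediction"` and confirms the prediction interval is wider.

```r
# 95% prediction interval at x0: formula vs predict(), and width vs the CI.
set.seed(3)
n <- 21
x <- runif(n, 0, 0.6)
y <- 4 + 10 * x + rnorm(n, sd = 1.5)
fit <- lm(y ~ x)

x0 <- 0.3
b <- coef(fit)
y0_hat <- b[[1]] + b[[2]] * x0
sigma_hat <- summary(fit)$sigma
# The leading 1 adds the variance of the new response about its mean
se_pred <- sigma_hat * sqrt(1 + 1 / n + (x0 - mean(x))^2 / sum((x - mean(x))^2))
t_crit <- qt(0.975, df = n - 2)

pi_manual <- c(fit = y0_hat,
               lwr = y0_hat - t_crit * se_pred,
               upr = y0_hat + t_crit * se_pred)
new <- data.frame(x = x0)
pi_predict <- predict(fit, newdata = new, interval = "prediction")
ci_predict <- predict(fit, newdata = new, interval = "confidence")

pi_width <- pi_predict[1, "upr"] - pi_predict[1, "lwr"]
ci_width <- ci_predict[1, "upr"] - ci_predict[1, "lwr"]
c(prediction = pi_width, confidence = ci_width)
```

Collecting more data shrinks the $1/n$ and $(X_0 - \bar{X})^2$ terms, but the leading $1$ stays, so the prediction interval never shrinks to zero width.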

General Idea: Partitioning the variation

We see variation in the response. We want to attribute that variation to different sources: variation due to the mean varying according to our regression model, and variation due to the random error.

Partition of variation

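One can show that

$$\sum_{i=1}^n (Y_i - \bar{Y})^2 = \sum_{i=1}^n (Y_i - \hat{Y}_i)^2 + \sum_{i=1}^n (\hat{Y}_i - \bar{Y})^2.$$

Many notations:

Total SS = TSS = $\sum_{i=1}^n (Y_i - \bar{Y})^2$

Residual SS = RSS = SSE = $\sum_{i=1}^n (Y_i - \hat{Y}_i)^2$

Regression SS = SSR = $\sum_{i=1}^n (\hat{Y}_i - \bar{Y})^2 = \hat{\beta}_1^2 \sum_{i=1}^n (X_i - \bar{X})^2 = \text{TSS} - \text{RSS}$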
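A numerical check of the partition on simulated data (hypothetical values). It also checks $\text{SSR} = \hat{\beta}_1^2 \sum (X_i - \bar{X})^2$, which holds in simple linear regression because $\hat{Y}_i - \bar{Y} = \hat{\beta}_1 (X_i - \bar{X})$.

```r
# Verify TSS = RSS + SSR and SSR = b1^2 * Sxx for a least squares fit.
set.seed(4)
n <- 21
x <- runif(n, 0, 0.6)
y <- 4 + 10 * x + rnorm(n, sd = 1.5)
fit <- lm(y ~ x)
y_hat <- fitted(fit)

tss <- sum((y - mean(y))^2)        # total SS
rss <- sum((y - y_hat)^2)          # residual SS
ssr <- sum((y_hat - mean(y))^2)    # regression SS
ssr_alt <- coef(fit)[[2]]^2 * sum((x - mean(x))^2)

c(TSS = tss, RSS_plus_SSR = rss + ssr)
c(SSR = ssr, SSR_alt = ssr_alt)
```

The identity relies on the fit being least squares with an intercept; for an arbitrary line the cross term does not vanish.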

Degrees of freedom

The degrees of freedom for the sums of squares are also additive: TSS has $n - 1$, RSS has $n - 2$, and SSR has $1$, so $n - 1 = (n - 2) + 1$.
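Dividing each sum of squares by its degrees of freedom gives the F-statistic for $H_0: \beta_1 = 0$, $F = (\text{SSR}/1) / (\text{RSS}/(n-2))$, compared to an $F_{1, n-2}$ distribution. In simple linear regression $F = t^2$ for the slope; in the weightlifting-birds output, $3.084^2 \approx 9.51$, matching the reported F-statistic of 9.513 up to rounding. A sketch on simulated data (hypothetical values):

```r
# F-test for the slope from sums of squares, checked against lm() and anova().
set.seed(5)
n <- 21
x <- runif(n, 0, 0.6)
y <- 4 + 10 * x + rnorm(n, sd = 1.5)
fit <- lm(y ~ x)

rss <- sum(resid(fit)^2)                      # n - 2 df
ssr <- sum((fitted(fit) - mean(y))^2)         # 1 df
f_stat <- (ssr / 1) / (rss / (n - 2))
p_value <- pf(f_stat, df1 = 1, df2 = n - 2, lower.tail = FALSE)
t_slope <- summary(fit)$coefficients["x", "t value"]

c(F = f_stat, t_squared = t_slope^2)
c(F_lm = summary(fit)$fstatistic[["value"]], p = p_value)
```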

R-squared

$R^2 = \text{SSR}/\text{TSS} = 1 - \text{RSS}/\text{TSS}$ is simply the proportion of variation in the response explained by the model.

In simple linear regression, $R^2$ is the square of the Pearson correlation between $X$ and $Y$.
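A sketch checking both descriptions of $R^2$ on simulated data (hypothetical values): $1 - \text{RSS}/\text{TSS}$, the squared correlation from `cor()`, and the `r.squared` component of `summary()` should all agree.

```r
# R^2 three ways in simple linear regression.
set.seed(6)
n <- 21
x <- runif(n, 0, 0.6)
y <- 4 + 10 * x + rnorm(n, sd = 1.5)
fit <- lm(y ~ x)

tss <- sum((y - mean(y))^2)
rss <- sum(resid(fit)^2)
r2 <- 1 - rss / tss

c(r2 = r2, cor_squared = cor(x, y)^2, lm = summary(fit)$r.squared)
```

The squared-correlation form is specific to one explanatory variable; with several, $R^2$ is instead the squared correlation between $Y$ and $\hat{Y}$.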

Next Week

I’m out of town; Trevor, your TA, will lead lecture and lab.

I’ll be reachable by email, but will have limited time to respond, especially on Tue and Wed.

You don’t need to print the notes; Trevor will bring you a packet for the week on Monday (and I’ll post the notes online as well).

Multiple linear regression:

- Matrix setup

- Least squares estimates

- Properties of the least squares estimates

Read along in Chapter 2 of the textbook.